No new features

John Siracusa describes this new system in enormous detail (23 pages packed with information) and I must say I’m deeply, deeply impressed by what each of those zero features brings with it in terms of technological advances and capabilities.

If you’re interested in the technology of the Mac, this is a must read. The advances - both current and planned - under the hood are phenomenal. I find it very inspiring to see so much forward-looking design focus in a mainstream product.

Shelved

Web apps

Development workflow

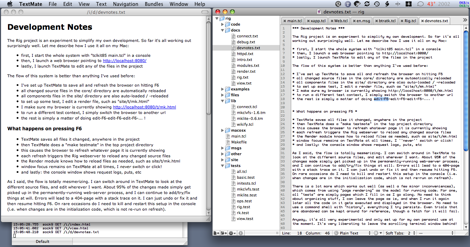

Click for full image - my real setup uses larger windows, filling a 1680x1050 screen.

The top-left is a Camino browser, with underneath it an iTerm window showing the stdout/stderr log. On the right is the TextMate editor. This is all MacOSX, clearly.

The text on the screen explains a bit what's going on. The reason this works so well is that I'm developing inside a running process - something people working in Common Lisp and Smalltalk/Squeak take for granted. To work on some stuff, I add a new test page with a mix of comments and embedded Tcl calls. The central motto here is: Render == Run. Unlike interactive commands, the test page does all the steps needed to reach a certain state, and shows any output I want to see along the way.

On a separate page in the browser, I can browse through all namespaces, variables, arrays, open channels, loaded packages, and more (what "hpeek" did, but far more elaborate). Apart from crashes and startup-related changes to the code, the server-based development process is never stopped. I can add calls to try out new code, and then decide after the fact which details to look into. Then, I just edit and hit F6 to fix or finish the code, whatever.

Last but not least, one of the test pages runs a Tcl test suite, which gets added to as soon as the newest code stabilizes a bit. So again, it's a matter of keeping that page open in the browser and hitting F6.
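To make the Render == Run idea concrete, here is a minimal sketch in Python rather than Tcl. It is not the actual setup described above: the `{{ ... }}` placeholder syntax, the `STATE` dictionary, and the `bump` and `render` helpers are all hypothetical names chosen for illustration. The point is only that rendering the page re-runs every embedded call against live state, so a reload reproduces the whole sequence of steps.

```python
import re

# Hypothetical long-lived state, standing in for the running server process.
STATE = {"counter": 0}

def bump(n=1):
    """Example helper a test page might call; it mutates the live state."""
    STATE["counter"] += n
    return STATE["counter"]

def render(page, env):
    """Replace each {{ expr }} in page with the result of evaluating expr."""
    def run(match):
        try:
            return str(eval(match.group(1).strip(), env))
        except Exception as exc:
            # Show failures inline instead of aborting the whole page.
            return f"<error: {exc!r}>"
    return re.sub(r"\{\{(.*?)\}\}", run, page)

# A "test page": comments mixed with embedded calls that rebuild a known state.
PAGE = """Reset first: {{ STATE.update(counter=0) }}
After one bump: {{ bump() }}
After adding ten: {{ bump(10) }}
Broken call: {{ undefined_name }}
"""

ENV = {"STATE": STATE, "bump": bump}
output = render(PAGE, ENV)
print(output)
```

Because the page itself resets the state before exercising it, rendering it again gives the same output, which is what makes "keep the page open and hit reload" a workable test loop.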

This approach has already paid for itself many times over (the "cost" being only my time spent on it, evidently).

Good company

"Now that the best and the brightest have spent a decade building and debugging threading frameworks in Java and .NET, it’s increasingly starting to look like threading is a bad idea; don’t go there. I’ve personally changed my formerly-pro-threading position on this 180º since joining Sun four years ago."

See Tim Bray's weblog for more.

Update: more good company - Donald Knuth has this to say:

I won’t be surprised at all if the whole multithreading idea turns out to be a flop, [...]

and about multicore:

How many programmers do you know who are enthusiastic about these promised machines of the future? I hear almost nothing but grief from software people, [...]

Read the interview for context.

Web apps

It's particularly interesting to see how the workflow comes together with just TextMate and Safari. There is no explicit compile or even save; it's all based on a single mechanism: Render == Run. Which, as it turns out, is exactly how I've been doing my own small-scale web app development - based on an internal package called Mavrig. This is the way to build web apps, if you ask me, all the way to the deployment approach used at the end of the above video.

Ok, so "all" I have to do now is finish Vlerq and Mavrig, to show how Tcl + Metakit technology can achieve the same effectiveness, but with an order of magnitude less complexity than Python + Django (+ SQLite in local mode, presumably).

I wonder how long it'll take for this to show up on iPhones and iPod Touches...

Nightmare scenario

The Vlerq project is about very high level concepts as well as the close-to-raw-silicon implementation. After nearly four decades of exposure to computing technology, I consider Lisp the most powerful production-grade environment available today for taking very high level concepts to a runnable form. A system such as SBCL bridges an extraordinary range: interactivity (through Slime and Emacs), excellent introspection and debugging, and machine-code generation, all in one. I envy the masters who know how to live inside SBCL and are able to perform magic in a world which brings together extreme abstraction and raw performance.

Problem #1 is that SBCL is not deployable, let alone embeddable in other languages (a separate process is tricky for robust deployment, and SBCL is a pretty large environment to drag along). For something as general-purpose as Vlerq, being tied to a little-used system is a big issue. Problem #2 is that I have far too little experience with both SBCL and Emacs to be truly productive with them in the next few years (yes, it takes years to make app, code, and bits sing, in my experience).

Problem #1 could be addressed by generating code for another system, such as the (delightfully Scheme-like) Lua language, along with C-coded primitives for all performance-specific loops and bit-twiddling. But that means problem #2 will sting even more: now I not only have to become proficient with SBCL, I also need to implement complex code on multiple levels, so that the generated Lua + C source code flies well.

It gets worse: problem #3 is that Lua is not really rich enough yet in terms of application-level libraries. In fact, I consider Tcl to be one of the best application-level languages around (yes, above even Python and Ruby) because of its excellent malleability and the way it supports domain-specific languages. Like Lisp, Tcl melds code and data together in a very fluid way - meaning it allows you to bring the design towards the app, instead of the other way around. It's ironic that Lisp and Tcl share this property, but at totally opposite ends: Lisp on the algorithmic side and Tcl on the application glue side.

So what's the nightmare? Well, to be truly effective in such a context, I'd have to be a master in SBCL, Emacs, Lua, C, and Tcl. And I'm not. I've been feeling the pain for a decade now. And I just don't know whether I should aim for such a context, or just muddle along in one or two technologies - with all the limitations associated with them.

I'd love to be proven wrong, but those who say "use language X" probably don't understand what breadth of conceptual / performance gap I'm trying to bridge. In a way that works outside a laboratory setting, that is.

LuaVlerq 1.7.0

This is Lua-only, since Lua is now tightly integrated into Vlerq (or is it the other way around?). The intention is to later add thin language wrappers for additional language bindings.

I'm pleased with the 1.7.0 code. Despite its immature status, it's small and it's snappy - both are essential starting conditions for me.

On track

I'm very pleased with v7 because it includes (early) code for supporting missing values and mutable/translucent view layers. This is where the v4 design had reached its limits. And the whole code base is only around 5K lines of C plus 200 lines of Lua. Several design choices, particularly the main internal data structures, have stood the test of time and have survived virtually as is in all the last rewrites. With a fair amount of functionality implemented, this indicates that the core design is sound (even if some code is still pretty ugly).

Onwards!

Sculpting

The Platonic form of Lisp is somewhere inside the block of marble. All we have to do is chip away till we get at it.

That's an elegant and accurate way of describing the software design process. And as the author of "Hackers and Painters", he ought to know.

OOPs

It's déjà vu all over again... the same discussion has been raging for years in the Tcl community. Both languages are more general than just OOP. And both "suffer" from a perceived lack of it as a consequence.

In my opinion, OOP is not all it's cracked up to be. When you're creating new DSL layers or doing the kind of meta-programming Lisp is famous for, then forcing everything into OO turns it into a straitjacket - limiting ways in which to think about abstraction. Or to put it differently: yes, OO is great to model real-world objects, but that doesn't carry over to DSL design and some forms of abstraction/decomposition.

(full disclosure: I've been going through SICP again recently, and the meta-circular evaluator and Y-combinator - fantastic stuff)

Code bloat

The difference being only that something of half a million lines of code needs to consist of a core/engine which makes it easy to write the rest in at least an order of magnitude fewer lines of code. Today's TLA for that approach is "DSL" - a concept which only few languages adequately support, IMO: Lisp/Scheme par excellence, along with FORTH and Tcl (and Ruby + Lua trying).

Languages

APL - the terse language

C - the implementation language

Forth - the lowest high-level language

Java - the sellable language

JavaScript - the omnipresent language

Lisp - the malleable language

Lua - the RISC-like language

Perl - the TIMTOWTDI language

Python - the maintainable language

Ruby - the upcoming language

Tcl - the deployable language

Absent in this summary are the FP languages, as I know too little about them.

Iteration

This, in a nutshell, is one of the properties of the Vlerq project. And yes, I've been thinking about a revised design...

Am currently taking notes (on paper, the ultimate sketching environment!) and mulling over some core data-structure design changes.

This time, I'm looking into supporting missing values from the ground up. Looks like it could simplify a number of issues. Without leading to "null values" or three-valued logic. It can actually conform to Tcl's everything-is-a-string mantra, even though a missing value is not representable as a string.

Uh, oh...

No rush, though: 1) I have no time for it right now, 2) the current Ratcl code is humming along nicely, 3) still can't wrap my mind around some of the more advanced aspects, so there's a definite risk of failure.

(Having said that, none of the above has stopped me before. Will just have to wait and see!)

Spreadsheet logic in Python

The Trellis "sees" what values your rules access, and thus knows what rules may need to be rerun when something changes -- not unlike the operation of a spreadsheet.

But even more important, it also ensures that callbacks can't happen while code is "in the middle of something". Any action a rule takes that would cause a new event to fire is automatically deferred until all of the applicable rules have had a chance to respond to the event(s) in progress. And, if you try to access the value of a rule that hasn't been updated yet, it's automatically updated on-the-fly so that it reflects the current event in progress.

No stale data. No race conditions. No callback management. That's what the Trellis gives you.
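The spreadsheet analogy in the quote can be sketched in a few lines of plain Python. This is not the Trellis API: the `Cell` class and its `get`/`set` methods are hypothetical names, and the sketch covers only two of the ideas quoted above, namely recording which values a rule reads and refreshing a stale rule on demand. It does not attempt the deferral of events that the quote also describes.

```python
class Cell:
    """A plain value or a rule; rules record which cells they read."""

    _active = None  # the rule currently being evaluated, if any

    def __init__(self, value=None, rule=None):
        self._value = value
        self._rule = rule
        self._readers = set()            # rules that read this cell
        self._stale = rule is not None   # rules start out uncomputed

    def get(self):
        if Cell._active is not None:
            self._readers.add(Cell._active)  # "see" the access
        if self._stale:
            self._recompute()                # update on the fly
        return self._value

    def set(self, value):
        if self._rule is not None:
            raise ValueError("cannot assign to a rule cell")
        if value != self._value:
            self._value = value
            self._invalidate_readers()

    def _invalidate_readers(self):
        # Readers re-register themselves the next time they are recomputed.
        readers, self._readers = self._readers, set()
        for reader in readers:
            if not reader._stale:
                reader._stale = True
                reader._invalidate_readers()

    def _recompute(self):
        previous, Cell._active = Cell._active, self
        try:
            self._value = self._rule()
        finally:
            Cell._active = previous
        self._stale = False


price = Cell(10)
qty = Cell(3)
calls = []  # counts how often the rule really runs

def compute_total():
    calls.append(1)
    return price.get() * qty.get()

total = Cell(rule=compute_total)
print(total.get())  # first access runs the rule
qty.set(4)          # marks total as stale, does not recompute yet
print(total.get())  # recomputed on demand with the new quantity
```

The rule never names its dependencies; they are discovered while it runs, which is the part of the design that removes manual callback management.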

No threads

- chances of a coding mistake are way too high

- complete testing of race conditions is next to impossible

- full code review in OSS projects with plugins/extensions is not feasible

- failures are not repeatable, i.e. essentially non-deterministic

- one weak link can bring down the application

No thanks - I'll be sticking to async I/O (one of Tcl's strengths) and pipe- / socket- / mmap- / shmem-connected processes.
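As an illustration of the alternative, here is a small sketch of socket-connected tasks multiplexed by a single event loop. It uses Python's `asyncio` rather than Tcl's event loop, and the `echo_upper` and `main` names are made up for this example. Both ends run in one thread, so there is no shared-memory locking to get wrong.

```python
import asyncio
import socket

async def echo_upper(reader, writer):
    """Serve one connection: read lines and reply with them upper-cased."""
    while line := await reader.readline():
        writer.write(line.upper())
        await writer.drain()
    writer.close()

async def main():
    # A connected socket pair stands in for a pipe or socket between processes.
    a, b = socket.socketpair()
    server_r, server_w = await asyncio.open_connection(sock=a)
    client_r, client_w = await asyncio.open_connection(sock=b)

    server = asyncio.create_task(echo_upper(server_r, server_w))
    replies = []
    for msg in (b"no threads\n", b"no locks\n"):
        client_w.write(msg)
        await client_w.drain()
        replies.append(await client_r.readline())

    client_w.close()  # EOF ends the server loop
    await server
    return replies

replies = asyncio.run(main())
print(replies)
```

In a real deployment each end would typically live in its own process, which also addresses the "one weak link" point: a crash on one side closes the connection instead of corrupting the other side's memory.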

Tclkit 8.4.15 and 8.5a6

Metakit 2.4.9.7

Belt and suspenders

Erlang

Smalltalk

Fast forward to today and Alan Kay explains how this might just be the tip of a very new iceberg (PDF). As can also be inferred from the name of a new mailing list, btw. There's also a video (sound gets better after 20' or so).

It's slightly unfortunate that several developments with a potentially fascinating connection to Vlerq all come from a different setting (Lisp, Smalltalk, APL) and that my mental bandwidth and time simply aren't up to giving each of these the deep attention they really deserve. Oh well, I can do my best - but no better...

(With thanks to Arto Stimms for passing on several great pointers)

1975 programming

I find it fascinating that 30 years on, his 2006-dated comments are still as important to refer to as ever. More so perhaps, with programmers coming on board from a strictly scripting-language perspective.

Bisection bug search

Functional Programming

More articles by the same author are here. I can't figure out who the author is, other than... "By day I'm a Java developer at a mid-size Wall Street firm."

Flip the coordinates

How misguided.

In Fortran, collections of structs were often simulated by allocating arrays for each element, and indexing across multiple arrays to access struct fields. Somehow, we seem to have forgotten about the performance benefits that can give. I'll be even bolder and state that we're squandering huge amounts of performance by insisting on placing all fields of an object in adjacent memory slots.
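A small sketch of the two layouts, using Python's standard `array` module. The field names and the `record` helper are invented for illustration, and this is not Metakit's or Vlerq's actual storage format. Python also hides most of the cache effects that make the column-wise layout fast in C or Fortran, so treat this as a picture of the data layout, not a benchmark.

```python
from array import array

# Row-wise: one object per record, all fields of a record kept together.
rows = [{"id": i, "price": i * 0.5, "qty": i % 7} for i in range(1000)]

# Column-wise: one contiguous typed array per field, indexed by row number.
ids = array("l", (r["id"] for r in rows))
prices = array("d", (r["price"] for r in rows))
qtys = array("l", (r["qty"] for r in rows))

def record(i):
    """Reassemble row i from the separate columns."""
    return {"id": ids[i], "price": prices[i], "qty": qtys[i]}

# Aggregating one field touches every record in the row-wise layout,
# but only a single contiguous array in the column-wise layout.
total_row_wise = sum(r["qty"] for r in rows)
total_col_wise = sum(qtys)

print(total_row_wise, total_col_wise)
print(record(10))
```

The trade-off is visible in `record`: reading a whole row now means one lookup per column, while scanning or aggregating a single field becomes a walk over one dense array.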

Maybe one day others will see the light and figure out what Metakit and Vlerq are all about...

Update: See a related paper by Steve Johnson. Thx Andreas Kupries for the reference.

Cohesion

Mac development

Globals

I'm referring to the fact that software increasingly depends on pieces spread all over my hard disk, with "configuration files" everywhere. Pick any non-trivial developer tool - the problem is not just that it's hard to get things working properly, but that any configuration change later may break things.

If that isn't global state (and the worst engineering approach ever!), then I don't know what is ... What a mess.

Alan Kay

When people react instantly, they're not thinking, they're doing a table lookup.

Not particularly relevant, but a neat quote nevertheless... Here's another one I wholeheartedly agree with:

Lisp is the most important idea in computer science.

The one thought I came away with after reading this was: thinking takes time.

osh

EvilC

W3 intro's

Impressive coding

Exceptions

If you're running under Windows XP, consider converting the exception into a null pointer dereference in the catch handler:

    catch (...) {
        // now we're really stuffed
        int* p = 0;
        *p = 22;
    }

This has the advantage over an ordinary crash that you will get one of those special OS-supplied dialogs, that asks permission to send log details back to Microsoft. Naive users will interpret this as a Windows fault, and will direct their bile Redmondwards.

Lisp Universal Shell

If you do research and development in signal processing, image processing, machine learning, computer vision, bio-informatics, data mining, statistics, simulation, optimization, or artificial intelligence, and feel limited by Matlab and other existing tools, Lush is for you.

From Java to Rails

Yikes

Knuth

Interactive execution

...

As you can see, there are quite a few things one can do when an error is thrown. Clever - and I assume very useful while writing new code or just for trying out things before writing tests and code.

Data dominates

Data dominates. If you've chosen the right data structures and organized things well, the algorithms will almost always be self-evident. Data structures, not algorithms, are central to programming.

It's rule 5 on this page (which in turn came from Ivan Lazarte's comment on the wiki).

Second place, by Ken Thompson, from the same page:

When in doubt, use brute force.

Could be a mantra for Vlerq, that one!